Hi, this week, I encountered a issue when I tried to initialize a synchronization between to database using SQL Azure DataSync.

My scenario is the following :

- One database is located on West Europe (B1)

- One database is located on North Europe (B2 – this database is sync in case of failure)

- The database represent 28 giga and about 28 000 000 lines.

The problem with the datasync is the initialization due to the high capacity of the database.

You’ve for this multiple two first scenario.

- Create the Schema on base B1

- Set all the data on B1 (these two steps can be just one with an import database from a export or backup)

- Create the schema on base B2 (because DataSync is not able to create all the constraints of the schema)

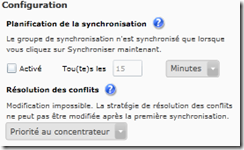

- Configure the sync

- Launch the first sync

This will result on a long transfer of data between B1 and B2. (In my case about 15-20h maybe more)

The second scenario is :

- Create the Schema on base B1

- Set all the data on B1 (these two steps can be just one with an import database from a export or backup)

- Create the schema on base B2 (because DataSync is not able to create all the constraints of the schema)

- Set all the data on B1 (these two steps can be just one with an import database from a export or backup)

- Configure the sync

- Launch the first sync

This will result on a analysis of all the data present in the tracking table of each database and a merge of the data according to the conflict priority (hub or spoke, in my case, the hub). This scenario is very very very long, it’s take more than 36h and create some latency on the database.

So I decided one last solution. Try to set the two database identical with the import of the same backup then configure the DataSync (without schedule the sync)

and then try to by pass the first init forcing the service to think that data was already sync.

For this scenario, the steps are :

- Create the Schema on base B1

- Import the database B1

- Import the database B2

- Configure the sync

- When the status of the two database on the portal is to :”ready to sync”, launch the sql script of initialization

- Launch the first sync and be happy to see just a couple of second and 0 changes. After this you’re in an incremental situation of a 25 giga database and you can schedule the sync.

The scripts is the following :

DECLARE @table_name nvarchar(255)

DECLARE @message varchar(255)

DECLARE @totalrow int

DECLARE @numberofrowtoupdate int = 10000; -- Change this number to update more rows per trans

DECLARE datasync_table_cursor CURSOR FOR

SELECT name FROM sys.tables with(nolock)

WHERE schema_name(schema_id) like '%datasync' AND name like '%dss_tracking'

OPEN datasync_table_cursor

FETCH NEXT FROM datasync_table_cursor

INTO @table_name

DECLARE @totalsumrow int

SET @totalsumrow = 0

WHILE @@FETCH_STATUS = 0

BEGIN

PRINT ' '

SELECT @message = '----- Start of update table: ' +

@table_name

PRINT @message

SET @totalsumrow = 0

DECLARE @SelectStatement NVARCHAR(2000)

DECLARE @ParameterList NVARCHAR(500)

SET @ParameterList = '@TotalRowsSelected INT OUTPUT'

SET @SelectStatement = 'Select @TotalRowsSelected = count(*) from DataSync.'+ @table_name

EXECUTE sp_executesql @SelectStatement, @ParameterList, @TotalRowsSelected=@totalrow output

SELECT @message = '----- Table rows number '+@table_name+' : ' +

CAST(@totalrow AS NVARCHAR(10))

PRINT @message

WHILE

(2 > 1)

BEGIN

BEGIN TRANSACTION

DECLARE @UpdateStatement NVARCHAR(2000)

DECLARE @FullStatement NVARCHAR(4000)

DECLARE @TotalRowsReturned int

DECLARE @rowcount int

SET @UpdateStatement =' UPDATE TOP ( ' + CAST(@numberofrowtoupdate as nvarchar(6))+ ' ) DataSync.'+@table_name + ' set [local_update_peer_timestamp] = 0,[last_change_datetime] = getdate() where local_update_peer_timestamp <> 0 '

EXECUTE sp_executesql @UpdateStatement

SELECT @rowcount = @@ROWCOUNT

SET @totalsumrow = @totalsumrow + @rowcount

SELECT @message = 'number of modified rows = ' + CAST(@totalsumrow AS NVARCHAR(10)) + ' / ' + CAST(@totalrow AS NVARCHAR(10))

print @message

--

IF @rowcount = 0

BEGIN

COMMIT TRANSACTION

BREAK

END

COMMIT TRANSACTION

END -- WHILE

SELECT @message = '-----End of table update: ' +

@table_name

PRINT @message

FETCH NEXT FROM datasync_table_cursor

INTO @table_name

END

CLOSE datasync_table_cursor;

DEALLOCATE datasync_table_cursor;

the aim is to :

- list the tracking table (using cursor)

- set the local_update_timestamp to 0 because this is the value used in the first sync. (using a loop and a update top to see the progress and also to be sure to not have any error with the logs transaction – (you can’t update million of row because the log will be full and errors will occurs)

I will update this code next week to add a summary report at the end of the scripts .

This scripts succeed in 30min for 11 000 000 of rows (2,2 giga).

For my 25 giga (approx 12 without index), the result are :

- Provisioning : 2637,14 sec(WEST) et 2797,84 sec(North)

- Script WE : 1h26min29sec

- Script NE : 2h41min20sec

- Init sync : 19,84 secondes, 0 UP 0 DL (

)

)

I save up to one day or more ![]()

Hi,

I tried to run this script but given me this error at this line :

set [local_update_peer_timestamp] = 0

The error is: Cannot update a timestamp column.

Any workaround?

Thanks